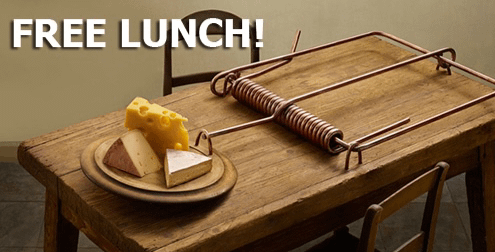

The AI Free Lunch is Over

Why Platform Teams Need to Prepare for the Cost Reckoning

Every second post across all my socials right now is about the wonders of Claude Code, Ralph, and most recently Clawdbot, now renamed Moltbot.

The capabilities are real, and so are the costs. Federico Viticci over at MacStories managed to burn through 180 million API tokens in his first month, roughly $3,600 in API costs.

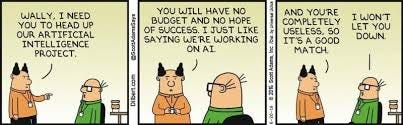

Platform engineering teams are about to inherit this AI cost problem. Every executive has seen a LinkedIn post about how agentic AI can magically turn ideas from an email into ready-to-deploy production code, and they want it now.

The patterns you’ve built for cloud governance, recharge models, and golden paths are about to become essential for AI too. The question is whether you’ll be ready.

The pricing landscape has shifted beneath everyone’s feet

The costs of large language models shifted significantly in 2025, and things got heated between vendors. In May, Anthropic introduced “Priority Tier”. By August, new weekly rate limits for Claude Pro and Max were added after the company admitted some subscribers were “consuming computing resources worth tens of thousands of dollars monthly”. Fast forward to 2026: Anthropic is at war with third parties, and we’ll soon find out whether Elon Musk wins damages over OpenAI dropping its nonprofit mission.

Subscription tiers have proliferated and token limits have become a real constraint. Premium pricing for complex reasoning has emerged as its own category, with extended thinking modes and deep research capabilities commanding higher rates. Users are hitting Claude Code limits mid-session, finding that agentic features burn through allocations faster than anyone anticipated.

What makes this particularly challenging is the unpredictability. Unlike traditional infrastructure costs that scale somewhat linearly, AI spending follows the complexity of the task. A minor change in prompt structure can double inference costs overnight. An agentic workflow that tests well in development can blow through production budgets in hours.
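One reason agentic costs are hard to predict: many agent loops resend the entire conversation, including every tool result, as input on each turn, so billed tokens grow roughly quadratically with the number of turns. The sketch below assumes a fixed-size system prompt, a fixed number of new tokens per turn, and no caching; the numbers are illustrative, not measured.

```python
def cumulative_input_tokens(turns: int, system_tokens: int, tokens_per_turn: int) -> int:
    """Total input tokens billed across an agent loop that resends its full history.

    Assumes (for illustration) that every turn adds `tokens_per_turn` new tokens
    (messages, tool output) and that the whole context is sent again as input.
    """
    total = 0
    context = system_tokens
    for _ in range(turns):
        context += tokens_per_turn  # new messages and tool results join the context
        total += context            # the entire context is billed again as input
    return total


for turns in (10, 20, 40):
    print(turns, cumulative_input_tokens(turns, system_tokens=5_000, tokens_per_turn=2_000))
```

With these assumed sizes, 10 turns bill 160,000 input tokens, 20 turns bill 520,000, and 40 turns bill 1,840,000. Doubling a run from 20 to 40 turns more than triples the input bill, which is how a workflow that looked cheap in a short test can behave very differently on real tasks.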

IDC warns of an “AI infrastructure reckoning,” predicting that Global 1000 organisations will underestimate their AI infrastructure costs by 30% through 2027. That’s not a forecasting gap you can explain away in a quarterly review.

This will become a platform engineering problem

Platform teams are being pressured to enable AI capabilities across the SDLC, but nobody’s talking about governance, cost controls, or environmental impact. Execs want the magic they’ve seen in vendor demos, and developers want to replicate that cool thing they saw on Twitter last week. The platform team gets to figure out how to deliver it without creating the next cloud spending crisis.

Everyone is so excited by capability that nobody is thinking about token economics or model capability tiers. It doesn’t help that most AI tools default to maximum capability and their interfaces don’t surface cost information.

But when Opus 4.5 costs five times as much as Haiku 4.5 (which handles routine tasks just as well), and Opus 4 costs three times as much as the newer Opus 4.5, that knowledge gap becomes expensive. A developer needing help with a function selects the most capable model, feeds it the entire codebase as context, and moves on, unaware they just spent £2 on a £0.10 task. Multiply that by hundreds of developers, thousands of times daily, and you’ll find yourself in a very uncomfortable meeting before the quarter is done.
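The gap is easy to make concrete with a back-of-the-envelope estimator. The prices below are assumed to be Anthropic's published list prices per million tokens, in US dollars, at the time of writing, and the dictionary keys are informal labels rather than API model IDs; check the current pricing page before reusing the numbers. The request sizes are hypothetical.

```python
# Assumed list prices in USD per million tokens at the time of writing; verify before use.
PRICES = {
    "opus-4": {"input": 15.00, "output": 75.00},
    "opus-4.5": {"input": 5.00, "output": 25.00},
    "haiku-4.5": {"input": 1.00, "output": 5.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at list price (ignores caching and batch discounts)."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical request: the whole codebase as context versus just the relevant file.
whole_repo = request_cost("opus-4.5", input_tokens=150_000, output_tokens=2_000)
targeted = request_cost("haiku-4.5", input_tokens=8_000, output_tokens=2_000)
print(f"Opus 4.5 with the whole repo: ${whole_repo:.2f}")
print(f"Haiku 4.5 with one file:      ${targeted:.3f}")
```

Under these assumptions the whole-repository Opus 4.5 request costs about $0.80 and the targeted Haiku 4.5 request under two cents, roughly a 44x difference for the same question.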

Platform teams can help close this gap by making cost-aware choices easier.

Golden paths steer users towards the most efficient model for each task.
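A golden path for model selection can be as simple as a default mapping from task type to model tier. The task categories, tier names, and fallback below are hypothetical; the point is that the economical tier is chosen unless someone deliberately asks for more, and that overrides are flagged so they can be reviewed.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical golden-path defaults: task categories and tier names are illustrative.
ROUTES = {
    "autocomplete": "small",
    "unit_tests": "small",
    "code_review": "medium",
    "refactor": "medium",
    "architecture": "large",
}


@dataclass
class Route:
    task: str
    tier: str
    overridden: bool  # True when the caller chose something other than the default


def choose_tier(task: str, requested_tier: Optional[str] = None) -> Route:
    """Return the default tier for a task unless the caller explicitly asks for another."""
    default = ROUTES.get(task, "medium")  # unknown tasks fall back to the middle tier
    if requested_tier is None:
        return Route(task, default, overridden=False)
    return Route(task, requested_tier, overridden=requested_tier != default)


print(choose_tier("unit_tests"))
print(choose_tier("unit_tests", requested_tier="large"))
```

Keeping the override available, and recorded, matters: developers are never blocked from a larger model, they just have to choose it on purpose.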

Self-hosted, open source models like DeepSeek, Qwen, and Llama have become viable alternatives for many tasks.

Context caching can cut the cost of repeated input tokens by up to 90%, and a platform can switch it on by default so developers get the discount automatically.
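The arithmetic behind caching is worth spelling out, because the discount applies only to the cached prefix. This sketch assumes Anthropic-style multipliers (writing a prefix to the cache costs 1.25x the base input price and reading it back costs 0.1x) and that every request lands inside the cache lifetime; other providers use different multipliers and rules.

```python
def input_cost(n_requests: int, prefix_tokens: int, suffix_tokens: int,
               price_per_mtok: float, cached: bool,
               write_multiplier: float = 1.25, read_multiplier: float = 0.10) -> float:
    """USD input cost for n requests that share a common prompt prefix.

    Assumes the prefix is written to the cache on the first request and read
    back on every later one (i.e. all requests fall within the cache TTL).
    """
    per_token = price_per_mtok / 1_000_000
    if not cached or n_requests == 0:
        return n_requests * (prefix_tokens + suffix_tokens) * per_token
    first = prefix_tokens * write_multiplier + suffix_tokens            # cache write
    rest = (n_requests - 1) * (prefix_tokens * read_multiplier + suffix_tokens)  # cache reads
    return (first + rest) * per_token


uncached = input_cost(100, 50_000, 1_000, price_per_mtok=5.0, cached=False)
cached = input_cost(100, 50_000, 1_000, price_per_mtok=5.0, cached=True)
print(f"uncached ${uncached:.2f}, cached ${cached:.2f}, saving {1 - cached / uncached:.0%}")
```

With 100 requests sharing a 50,000-token prefix plus 1,000 unique tokens each, at an assumed $5 per million input tokens, the uncached input bill is $25.50 and the cached one about $3.29, a saving of roughly 87%. The headline 90% applies to the cached tokens themselves; the write premium and the uncached suffix take a little back.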

Semantic code indexing uses retrieval-augmented generation (RAG) to pull in targeted context instead of entire code repositories.
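A toy version of retrieval shows the idea: split the repository into chunks, score each chunk against the question, and send only the best matches. Real semantic indexes use an embedding model and a vector store; here a bag-of-words vector stands in so the example runs with the standard library alone, and the repository contents are made up.

```python
import math
import re
from collections import Counter


def vectorise(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    """Names of the k chunks most similar to the query; only these go into the prompt."""
    q = vectorise(query)
    ranked = sorted(chunks, key=lambda name: cosine(q, vectorise(chunks[name])), reverse=True)
    return ranked[:k]


# Hypothetical repository, already split into named chunks.
repo = {
    "billing.py": "def calculate_invoice(customer): apply tax and discount to invoice lines",
    "auth.py": "def login(user, password): verify password hash and issue session token",
    "search.py": "def search_products(query): rank products by relevance",
}

print(retrieve("why is the invoice tax wrong", repo, k=1))
```

For the question about invoice tax only `billing.py` is selected, so the prompt carries one small chunk rather than the whole repository.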

Batch processing for async workloads halves costs without user intervention.
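Batch APIs trade latency for price, so the platform's job is to route work that can wait. This sketch assumes a flat 50% batch discount and a hypothetical `deferrable` flag set by the calling service; actual discounts, turnaround times, and eligibility vary by provider.

```python
from dataclasses import dataclass

BATCH_DISCOUNT = 0.5  # assumption: batch pricing at roughly half the synchronous list price


@dataclass
class Job:
    name: str
    list_cost: float   # estimated USD cost at the synchronous list price
    deferrable: bool   # hypothetical flag: can the result wait hours rather than seconds?


def plan(jobs: list[Job]) -> tuple[list[Job], list[Job], float]:
    """Split jobs into synchronous and batch queues and project the total cost."""
    sync = [j for j in jobs if not j.deferrable]
    batch = [j for j in jobs if j.deferrable]
    total = sum(j.list_cost for j in sync) + sum(j.list_cost * BATCH_DISCOUNT for j in batch)
    return sync, batch, total


jobs = [
    Job("chat", 0.40, deferrable=False),
    Job("nightly-docs", 3.00, deferrable=True),
    Job("test-gen", 1.60, deferrable=True),
]
sync, batch, total = plan(jobs)
print([j.name for j in batch], round(total, 2))
```

Here three jobs that would cost $5.00 at list price come to about $2.70 once the two deferrable ones go through the batch queue, and the interactive chat request is untouched.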

Surfacing estimated costs before execution encourages deliberate usage.
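A pre-flight estimate does not need to be exact to change behaviour. This sketch uses the common rule of thumb of roughly four characters per token (real tokenisers differ by model and language), takes prices per million tokens as inputs, and uses a hypothetical $0.50 threshold above which the user is asked to confirm.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: about four characters per token for English text (heuristic only)."""
    return max(1, len(text) // 4)


def preflight(prompt: str, expected_output_tokens: int, input_price: float,
              output_price: float, confirm_above_usd: float = 0.50) -> tuple[float, bool]:
    """Estimate a request's USD cost before sending it.

    Prices are USD per million tokens. Returns (estimated cost, whether the
    user should be asked to confirm because the cost exceeds the threshold).
    """
    cost = (estimate_tokens(prompt) * input_price
            + expected_output_tokens * output_price) / 1_000_000
    return cost, cost > confirm_above_usd


cost, needs_confirmation = preflight("x" * 400_000, 2_000, input_price=5.0, output_price=25.0)
print(f"Estimated ${cost:.2f}; confirm first: {needs_confirmation}")
```

A 400,000-character prompt (about 100,000 estimated tokens) with 2,000 expected output tokens at $5 and $25 per million comes to about $0.55, enough to trigger a confirmation; a short prompt passes straight through.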

Per-team cost attribution makes spend visible to those who can influence it.
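Attribution is mostly a matter of tagging every request and rolling the spend up. The records below are hypothetical, standing in for whatever your gateway or provider usage export produces.

```python
from collections import defaultdict

# Hypothetical usage records, e.g. exported from an AI gateway's request log.
records = [
    {"team": "payments", "model": "opus-4.5", "cost_usd": 12.40},
    {"team": "payments", "model": "haiku-4.5", "cost_usd": 0.90},
    {"team": "search", "model": "opus-4.5", "cost_usd": 3.10},
    {"model": "opus-4.5", "cost_usd": 7.25},  # no team tag
]


def spend_by(records: list[dict], key: str) -> dict[str, float]:
    """Total cost per value of `key` (team, model, project...), largest first."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.get(key, "untagged")] += record["cost_usd"]
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


print(spend_by(records, "team"))
print(spend_by(records, "model"))
```

Giving missing tags their own `untagged` bucket makes gaps in attribution visible instead of quietly spreading that spend across everyone.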

Quotas, spending limits, and recharge models provide guardrails and allocate costs to the relevant department.
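A spending limit can be enforced at the point where requests leave the platform. This is a minimal in-memory sketch with a hypothetical 80% warning threshold; a real implementation would persist spend, reset it each billing period, and decide whether breaching the limit blocks requests or only raises an alert.

```python
class BudgetExceeded(Exception):
    """Raised when a request would take a team past its spending limit."""


class TeamBudget:
    """In-memory monthly spending limit with a soft warning threshold (illustrative)."""

    def __init__(self, limit_usd: float, warn_at: float = 0.8):
        self.limit_usd = limit_usd
        self.warn_at = warn_at  # fraction of the limit at which to start warning
        self.spent_usd = 0.0

    def charge(self, cost_usd: float) -> str:
        """Record a request's cost, or refuse it if it would exceed the limit."""
        if self.spent_usd + cost_usd > self.limit_usd:
            raise BudgetExceeded(
                f"${cost_usd:.2f} request would exceed the ${self.limit_usd:.2f} limit")
        self.spent_usd += cost_usd
        return "warn" if self.spent_usd >= self.warn_at * self.limit_usd else "ok"


payments = TeamBudget(limit_usd=100.0)
print(payments.charge(50.0))
print(payments.charge(35.0))
try:
    payments.charge(20.0)
except BudgetExceeded as err:
    print("blocked:", err)
```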

The goal isn’t to slow people down; it’s to make the economical choice the path of least resistance.

The FinOps Sequel: AI Edition

If this feels familiar, it should! Platform engineering exists partly because of what happened during the public cloud boom. Back in 2020, Gartner estimated that 80% of organisations would overshoot their cloud IaaS budgets, and industry surveys today still find that up to 32% of enterprise cloud spend is simply wasted.

The organisational response was FinOps—financial operations practices that brought visibility and accountability to cloud spending. Platform teams became central to this work, building the tagging standards, cost allocation mechanisms, and governance frameworks that made cloud economics manageable.

AI spending is following the same trajectory, but compressed. Gartner estimates $644 billion will be spent on generative AI in 2025, with cost management emerging as a core challenge. IDC predicts that by 2027, 75% of organisations will combine GenAI with FinOps processes. The FinOps Foundation has already published frameworks for AI cost management, introducing metrics like cost-per-inference and cost-per-action for agentic systems.
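The FinOps Foundation's own definitions should be the reference, but one plausible way to compute these two metrics is shown below, assuming cost-per-inference means spend divided by model calls and cost-per-action means spend divided by successfully completed actions. The run data and the notion of an "action" are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    inference_calls: int     # model requests made during the run
    total_cost_usd: float    # summed token cost of those requests
    successful_actions: int  # hypothetical unit, e.g. tickets resolved or PRs merged


def cost_per_inference(runs: list[AgentRun]) -> float:
    """Total spend divided by the number of model calls."""
    calls = sum(r.inference_calls for r in runs)
    return sum(r.total_cost_usd for r in runs) / calls if calls else 0.0


def cost_per_action(runs: list[AgentRun]) -> float:
    """Total spend divided by successful actions, so failed runs still count as cost."""
    actions = sum(r.successful_actions for r in runs)
    return sum(r.total_cost_usd for r in runs) / actions if actions else float("inf")


runs = [AgentRun(40, 2.00, 1), AgentRun(120, 6.00, 0), AgentRun(30, 1.00, 1)]
print(f"per inference: ${cost_per_inference(runs):.3f}, per action: ${cost_per_action(runs):.2f}")
```

For these three runs the cost per inference is under five cents, while the cost per successful action is $4.50, because the failed run still has to be paid for.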

The platform teams that built cloud governance muscle are well-positioned here. The concepts translate: visibility into consumption, allocation to cost centres, guardrails that enable rather than block, self-service within boundaries. The implementation details differ — tokens instead of compute hours, model selection instead of instance types — but the organisational patterns are the same.

What platform teams should do now

The pressure to enable AI capabilities isn’t going to go away. But platform teams have an opportunity to shape how their organisations adopt these capabilities, rather than cleaning up the mess afterward.

Build cost visibility before you need it. Implement token tracking by model, team, project, and use case, before finance comes asking. The organisations that built this infrastructure early in their cloud journeys had enormous advantages over those that retrofitted it later.
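Tracking is easiest when it happens in one place that every call passes through. This sketch uses a decorator that tags each call with model, team, project, and use case; the in-memory `USAGE_LOG`, the tag values, and the shape of the returned usage dict are all assumptions, since real SDKs report token usage in their own response objects.

```python
import functools
from typing import Callable

USAGE_LOG: list[dict] = []  # stand-in for a metrics pipeline or data warehouse


def tracked(model: str, team: str, project: str, use_case: str):
    """Decorator that records token usage for every call, tagged for later attribution.

    Assumes the wrapped function returns a dict with 'input_tokens' and
    'output_tokens' keys.
    """
    def decorate(fn: Callable[..., dict]) -> Callable[..., dict]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> dict:
            result = fn(*args, **kwargs)
            USAGE_LOG.append({
                "model": model, "team": team, "project": project, "use_case": use_case,
                "input_tokens": result["input_tokens"],
                "output_tokens": result["output_tokens"],
            })
            return result
        return wrapper
    return decorate


@tracked(model="haiku-4.5", team="payments", project="invoicing", use_case="test-generation")
def generate_tests(source: str) -> dict:
    # Hypothetical model call; a real version would use your provider's client.
    return {"text": "generated tests", "input_tokens": len(source) // 4, "output_tokens": 500}


generate_tests("def add(a, b): return a + b\n" * 100)
print(USAGE_LOG[-1])
```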

Design for vendor agnosticism. The model landscape is evolving too quickly to bet everything on one vendor. Very few organisations run on a single cloud any more, and there is even less reason to tie yourself to a single model provider. Provide your developers with a consistent interface that lets you change the underlying providers as economics and capabilities shift.
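One way to keep that flexibility is to have developers code against a small interface while adapters for each provider sit behind it. The adapters here are hypothetical stubs that only label their output; real ones would wrap the relevant vendor SDK or a self-hosted inference server.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """The one interface developers code against; providers plug in behind it."""

    def complete(self, prompt: str, max_tokens: int) -> str: ...


class HostedProvider:
    """Hypothetical stub for a hosted API adapter (a real one would call the vendor SDK)."""

    def complete(self, prompt: str, max_tokens: int) -> str:
        return f"[hosted] {prompt}"


class LocalProvider:
    """Hypothetical stub for a self-hosted open-weight model adapter."""

    def complete(self, prompt: str, max_tokens: int) -> str:
        return f"[local] {prompt}"


# Platform-owned configuration: which adapter serves which name.
PROVIDERS: dict[str, ChatProvider] = {"hosted": HostedProvider(), "local": LocalProvider()}


def complete(prompt: str, max_tokens: int = 256, provider: str = "hosted") -> str:
    """Platform entry point; callers never import a vendor SDK directly."""
    return PROVIDERS[provider].complete(prompt, max_tokens)


print(complete("Summarise this diff"))
print(complete("Summarise this diff", provider="local"))
```

Moving a workload from a hosted model to a self-hosted one then becomes a configuration change in the platform rather than a code change in every team's service.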

Extend your golden paths to include AI. If your platform provides opinionated, well-supported paths for common development tasks, AI integration should be part of that story. Don’t leave teams to figure out authentication, cost management, and best practices on their own.

Start the finance conversation proactively. Bring your CFO’s team into AI planning early. Establish governance frameworks before they’re urgently needed. Position your platform as the solution to AI cost governance, not just another source of AI capability requests.

Push back on unsustainable requests. Help stakeholders understand the cost implications of their choices and design solutions that balance capability with sustainability.

The AI free lunch was generous while it lasted. Platform teams that prepare now will be positioned to enable AI adoption that actually scales. Those that don’t will spend the next few years in reactive mode, building governance frameworks under pressure while costs spiral.

You’ve done this before with cloud. You know how the story goes.

For more perspectives on platform engineering, AI economics, and the human side of technical transformation, subscribe to The Chief Therapy Officer—monthly insights for technical leaders navigating organisational change.